By Madis Vasser

June 27th-29th saw the first VECTOR (Virtual Environments: Current TOpics in psychological Research) workshop in Tübingen, Germany. I had the great opportunity to take part in the event, give some presentations and hear some interesting ideas. Here is what happened in three days dedicated to virtual reality, psychology, medicine and computer science:

The first keynote was from Gary Bente, one of the older generation of VR researchers, who started his work in the ’80s.

His main focus was on social interactions in VR and the role of body language. He warned that although the new wave of consumer products is great, researchers should be careful about time delays in the system, as these are sometimes cleverly masked to produce a smoother overall experience.

This is great for the user, but a pain for the experimenter, who desperately needs to know the precise moment one event or another took place in the environment.

Gary also proposed a good analogy for the way we currently tend to use VR: think of it in terms of crutches and shoes. Crutches are a temporary aid that helps you do something you can usually do anyway (walk around town). Shoes, however, can take you to completely new places you had never even thought of! Using VR, we can, for example, study body language in real detail, substituting only small parts of the movement or completely swapping the movements of men and women. Could you tell the difference? According to the data, females are significantly better at telling the sex of an avatar solely from its body movement. Book suggestion – Infinite Reality: The Hidden Blueprint of Our Virtual Lives by Blascovich and Bailenson.

Next came a collection of shorter talks on the subject of virtual agents.

What stood out was a presentation on virtual classrooms by Sebastian Koenig from Katana Simulations. His project was a virtual school environment where teachers could practice different situations before standing in front of an actual class. The obstacles in building such an experience were similar to the ones we have seen in our lab – mainly creating many lifelike virtual characters and optimizing them to run on the required hardware. Other workshop participants also joined in, and we had a mini-session on how best to model and animate virtual humans. It turns out that the pipelines in different labs are quite similar, although we developed them independently of each other.

In the evening I held the world premiere of the VREX toolbox! As the first version rolled out, we did a live demo and walked through some example scenarios. People in the audience liked that the toolbox makes creating a working VR experiment in Unity significantly easier. Precise timing remains an open question, but it is hard to get around when using commercial engines. The toolbox was seen as a good way to quickly prototype experiments and run initial studies.

The webpage is now also up. There you can find the latest updates, download the free project and send feedback: vrex.mozello.com

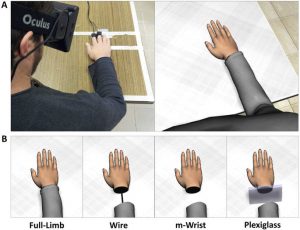

The second keynote, titled “If truly I err, I am”, by Emmanuele Tidoni looked at different ways to present the virtual avatar. Although there were many studies looking at different hand models, either connected to the body or not, none of them gave participants much training time before asking whether they felt ownership of the hand. The results showed that a hand connected to the arm elicits a stronger sense of ownership than a “floating” alternative. This struck me as odd, since most current VR games use floating hands, and I can say for sure that after some time these representations feel like your own hands. Can you have fine motor control without felt ownership of the limb?

Keynote 3 by Andreas Mühlberg was on VR exposure therapy. My takeaways from the talk: VR therapy works as well as real exposure, sensory input is essential, multiple environments help to generalize the effects, and adding wind to VR always helps. What I got stuck on was the point about perception being essential, given that many VR studies look quite horrible from a graphical point of view. So if even these crude versions work as well as the real thing, maybe a realistic VR exposure could work BETTER than real life? Could we use VR as shoes, not as crutches?

By the middle of the second day I was already known as the guy who always moans when there are no shadows in a virtual environment. I had a hard time understanding why visual quality is so lacking in psychological research. One response was that different experiments call for different quality standards. To me this sounds as if different psychological studies could get by with different levels of oxygen in the room. When doing an active task, you probably need more air, but for a simple questionnaire, we could surely tone it down? Sure, the participants are slightly suffocating, but they can still fill in the questionnaire… So my idea is to have at least some common baseline, because study participants have seen the current level of video games, and their expectations of visual finesse (and of oxygen levels) are not low! The other response was that science is not well funded, so we can’t have nice things, as “it takes a team of 50 specialized highly paid people a whole year to produce a proper AAA video game.” Fair point. But science experiments are hardly ever open-world epic adventures that devour your life for hours on end. Usually they are confined to a small area and last a few minutes. Achieving excellent quality within such helpful constraints is not unrealistic!

There was also a panel session focusing mainly on delays in the computer system, ways (not) to improve graphical quality, and fears of never crossing the uncanny valley of virtual characters (and of virtual environments too!). At the same time, a paradoxical concern was raised about VR getting too realistic, turning people into addicts and messing with their sense of true reality. Gary Bente said it best: the tech is coming anyway, there is no question about it. Now, how can we educate people and use the opportunities for good purposes? I’d also add that, given the number of users of widely distributed technologies (e.g. mobile phones and Pokémon Go), the people with problems are a tiny fraction.

Day three was packed with tutorials and lab visits. One of the highlights was seeing the Max Planck Institute and its associated facilities. The VR department is astounding – compared to our 4×3 tracking space, they have 12×12! Plus all the other VR motion platforms, such as the cable systems seen on the right. I also had an eye-to-eye experience with SMI eye tracking and heard their presentation on the topic. They have some quite impressive tech for the new commercial headsets, albeit at quite high prices. At their joint session with VT+, they used great terminology to distinguish between virtual and real therapies: “in vivo” vs “in virtuo”! I also got a nice overview of different tracking systems in general, so for future reference:

- Active – LED markers (like Oculus)
- Passive – reflective markers (like OptiTrack)
- Markerless – object recognition (like Kinect)
- Electromagnetic – magnets (like Hydra)
- Acceleration-based – IMUs (like Perception Neuron)
- Lasers – base stations (like HTC Vive)

The sessions ended with my own presentation on making epic experiments with the Unreal Engine. Although 1.5 hours was clearly not enough to fully show the capabilities of this game engine, it was enough to “convert” some listeners into believers in shadows in VR. I tried to tie in topics from the previous days – for example, there had been a discussion about whether the tools used to make virtual worlds are any fun themselves. UE definitely is! I also tried to address the problem of delays – one issue for researchers had been random frame drops here and there that mess up the timing. This can be reduced by making sure that your experiment ALWAYS runs at the required frame rate (90 fps for modern devices). That requires optimization, and Unreal has some excellent features for it. Lastly, I introduced the Blueprint scripting system, which can really empower a researcher who doesn’t know how to code. So my point was that labs do not need 50 people on game design to achieve great results – all that is needed is a principled decision:

VR experiments should at least have shadows.
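The 90 fps target translates into a hard time budget per frame: 1000 ms / 90 ≈ 11.1 ms. Below is a minimal Python sketch of how a lab might check a recorded session against that budget. It assumes you can export a per-frame timestamp log (in seconds) from your experiment; the function names and the 1.5× tolerance threshold are hypothetical choices for illustration, not part of Unreal, Unity or VREX.

```python
# Minimal sketch (hypothetical helpers, not an engine API): given per-frame
# timestamps in seconds, compute the frame budget at a target refresh rate
# and flag frame intervals that overran it.

def frame_budget_ms(target_fps: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / target_fps


def find_dropped_frames(timestamps_s, target_fps=90.0, tolerance=1.5):
    """Return (index, interval_ms) for every frame interval longer than
    `tolerance` times the frame budget, i.e. where at least one refresh was
    probably missed. `tolerance` is an assumed threshold, not a standard."""
    budget = frame_budget_ms(target_fps)
    dropped = []
    for i in range(1, len(timestamps_s)):
        interval_ms = (timestamps_s[i] - timestamps_s[i - 1]) * 1000.0
        if interval_ms > tolerance * budget:
            dropped.append((i, interval_ms))
    return dropped


if __name__ == "__main__":
    print(f"Budget at 90 fps: {frame_budget_ms(90):.1f} ms")
    # Simulated log: steady 90 fps, except one frame that took two refreshes.
    step = 1.0 / 90
    ts = [0.0, step, 2 * step, 4 * step, 5 * step]
    for index, interval in find_dropped_frames(ts):
        print(f"frame {index}: {interval:.1f} ms")
```

Any event logged in a flagged frame may be shifted by one or more refresh intervals (about 11 ms each at 90 fps), which is exactly the kind of timing noise that worries experimenters.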

Overall, it was a great event with lots of new ideas and contacts. It seems that VR is here to stay and will disrupt the psychological world on both the patient and the professional side. And I’m glad that the team at the University of Tartu CGVR lab is at the forefront. Many thanks to Jaan Aru and Raul Vicente for making the trip possible.
