Our researchers recently published an article titled “Attention is withdrawn from the area of the visual field where the own hand is currently moving”. What follows is a human-readable summary of this exciting work.

The core question is simple: how does the brain compute? One theory that has been gaining popularity in recent years is called predictive coding. In essence, it postulates that what the brain mostly does is predict its moment-to-moment inputs. As sensory information arrives, it is compared with the predictions, and if something doesn’t match, an error signal is generated to improve the prediction next time. This is an efficient approach: familiar actions can be predicted well, so resources are not wasted on processing low-level data that is not surprising anyway (an idea closely tied to active inference). Think about why you cannot tickle yourself.

The good thing about predictive coding theory is that it’s experimentally testable. Consider our own hand movements: we are hardly ever surprised when our hand appears in our field of view. The brain controls the body and knows exactly what to expect when the hand moves. Therefore, the movement of the hand should be less “interesting” for the brain. How can we actually test that?
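To make the predict, compare and update loop a bit more concrete, here is a minimal Python sketch. It is a toy of our own making, not the model used in the study: it assumes the “sensory input” is a single number, that the prediction is corrected by a fixed fraction of the error (the hypothetical `learning_rate` parameter), and the function name `predictive_coding` is purely illustrative. Familiar input quickly stops producing errors, while a sudden change produces a large one.

```python
"""Toy illustration of the predictive-coding loop: predict, compare, update.

This is NOT the model used in the study; it is a hypothetical sketch
that assumes a single scalar input and a fixed learning rate.
"""


def predictive_coding(inputs, learning_rate=0.5, initial_prediction=0.0):
    """Return the prediction error produced at each time step.

    A single prediction of the next input is kept. When the input
    arrives, the mismatch (prediction error) is recorded and a fraction
    of it (learning_rate) is used to correct the prediction for next time.
    """
    prediction = initial_prediction
    errors = []
    for observed in inputs:
        error = observed - prediction  # mismatch between input and prediction
        errors.append(error)
        prediction += learning_rate * error  # fix the prediction for the next step
    return errors


if __name__ == "__main__":
    # A predictable input (the same value over and over, loosely like a
    # familiar hand movement) followed by one surprising value.
    signal = [1.0] * 6 + [3.0]
    for step, err in enumerate(predictive_coding(signal)):
        print(f"step {step}: prediction error = {err:+.3f}")
```

Running it prints prediction errors that halve at every step while the input stays the same (+1.000, +0.500, +0.250, ...), then jump to +2.016 when the surprising value arrives. In this picture, the predictable part carries almost no “news”, which is exactly why the brain could afford to pay less attention to it.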

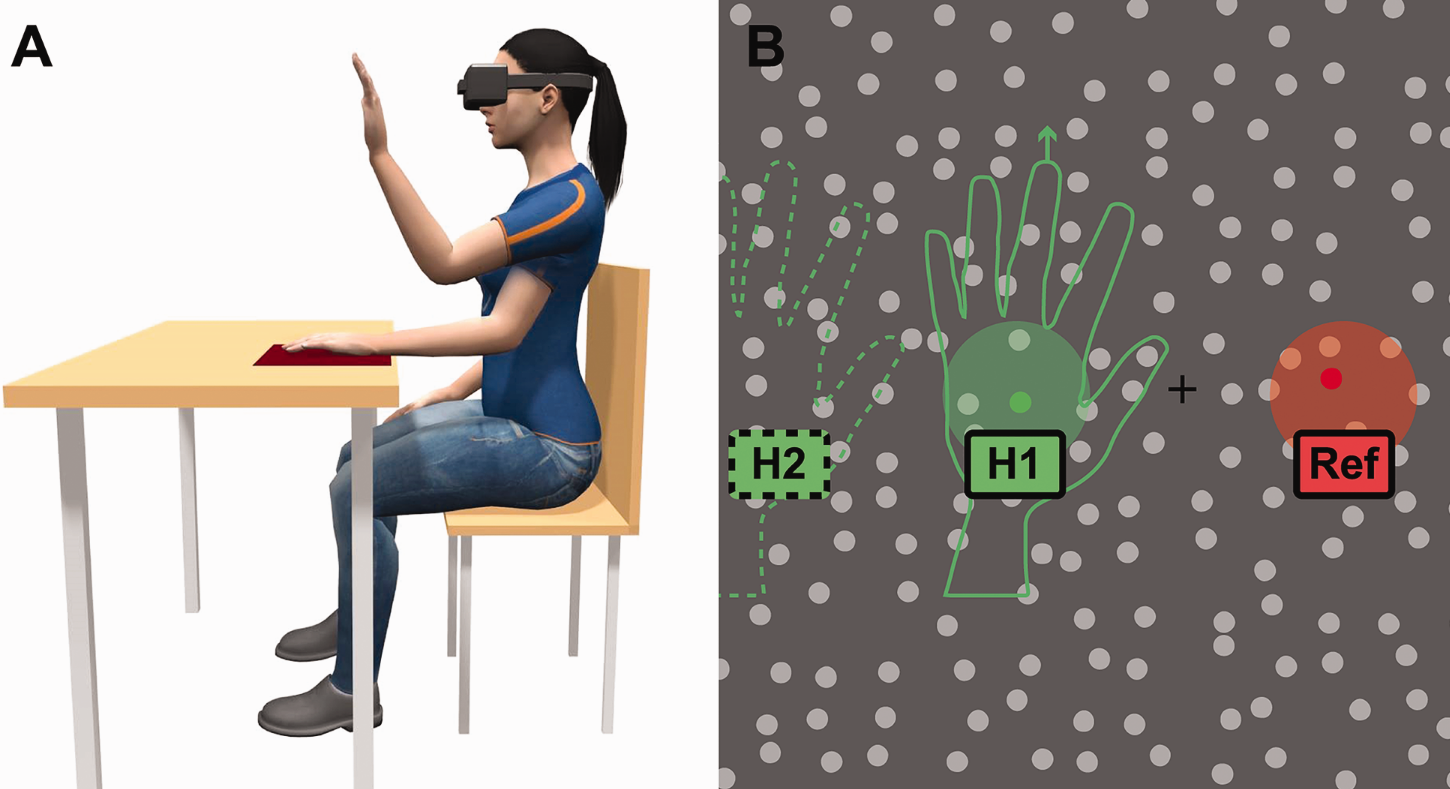

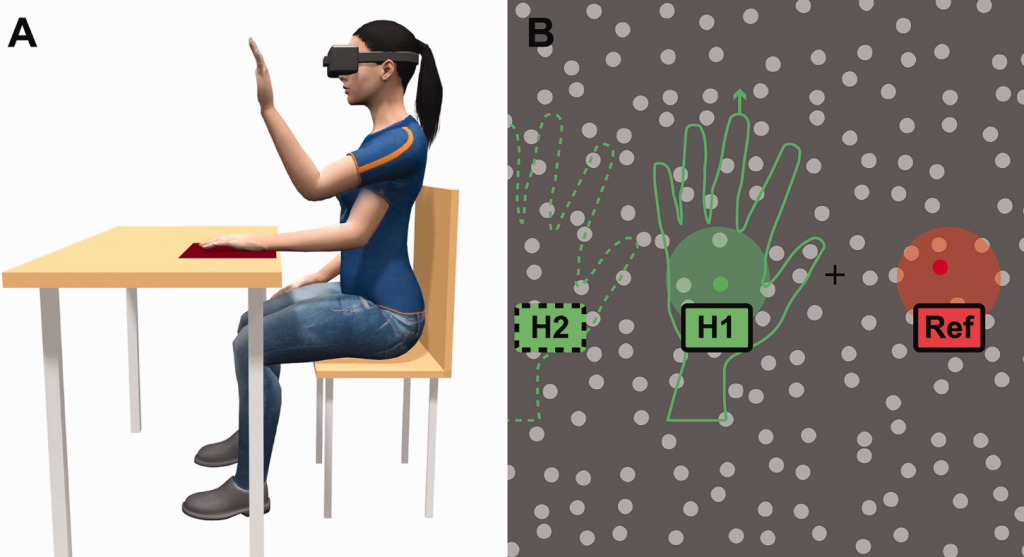

Here’s what we did in a nutshell: the participants wore an Oculus Rift CV1 headset with a Leap Motion hand tracker. The task was simple: lift your left hand up in front of your face and click the response button with your right hand as soon as you notice a tiny change in your visual field (figure 1). The change was either a slight shift in position or a change in color of one of the dots on the screen. Sometimes the change happened behind the participant’s hand, while at other times it occurred either at a random location or at the mirrored position on the right. Here’s the kicker: the participant’s hand was completely invisible, so there was nothing actually blocking the view when a stimulus was “behind” the hand.

Figure 1. Left: the movement path is shown (participants were not actually three-handed). Right: the dot matrix inside the headset. Changes could happen inside the H1, H2 or Ref region, or at a random location. Hands were always completely invisible.

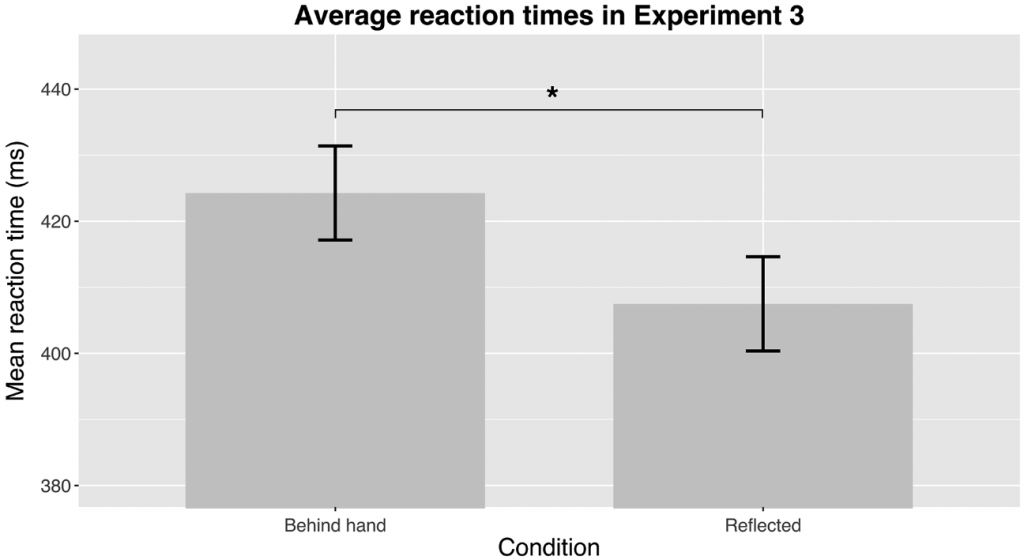

As predicted by active inference, we saw a statistically significant difference between the condition where the change occurred behind the invisible hand and the conditions where it occurred anywhere else. This held true for both movement and color changes. Figure 2 shows results from the third experiment, in which color changes were investigated in 29 subjects. The difference is small, but what is interesting is that there is a difference at all: remember, there was nothing visual that could have kept participants from reacting to the change. One possible explanation is that the brain was actively inhibiting the part of the visual field where the hand should have been, since information from there is not “news”. This would explain why reaction times to stimuli in that part of the visual field were slightly longer.

Figure 2. Left: stimulus behind the invisible hand. Right: stimulus mirrored to the right side, where no hands were present.

For us computational neuroscientists, this first result looks very promising, and we are already building on this research to further our understanding of the brain. On a more everyday note, you now have a plausible explanation for those moments when you can’t find something, only to discover you have been holding it in your hand the whole time!

Citation:

Kristjan-Julius Laak, Madis Vasser, Oliver Jared Uibopuu, Jaan Aru; Attention is withdrawn from the area of the visual field where the own hand is currently moving. Neurosci Conscious 2017; 3 (1): niw025. doi: 10.1093/nc/niw025